Afterthought: This kind of brainrot, the petty middle-management style of ends justifying the means, is symbiotic with pundit brainrot, the mentality that Jamelle Bouie characterizes thusly:

It is sometimes considered gauche, in the world of American political commentary, to give words the weight of their meaning. As this thinking goes, there might be real belief, somewhere, in the provocations of our pundits, but much of it is just performance, and it doesn’t seem fair to condemn someone for the skill of putting on a good show.

Both reject the idea that words mean things, dammit, a principle that some of us feel at the spinal level.

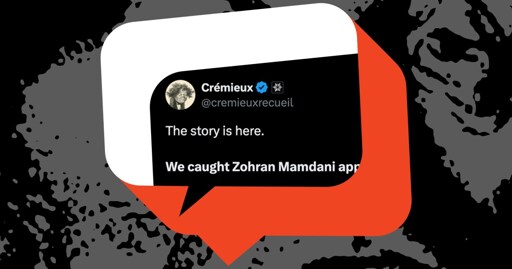

I don’t know why they decided to put an AI slop image right up top in their banner and then repeat it later.

Yeah, the washed-out faux-Ghibli aEStheTiC was a bit of a clue. Gonna go scrub my brains with the Tenniel illustrations for Alice now, with a Ralph Steadman chaser.