Our results show that women’s contributions tend to be accepted more often than men’s [when their gender is hidden]. However, when a woman’s gender is identifiable, they are rejected more often. Our results suggest that although women on GitHub may be more competent overall, bias against them exists nonetheless.

They didn’t use very comprehensive research methods. Also, they only used GitHub.

First, from the GHTorrent data set, we extract the email addresses of GitHub users. Second, for each email address, we use the search engine in the Google+ social network to search for users with that email address. Third, we parse the returned users’ ‘About’ page to scrape their gender.

a bias against men exists, that is, a form of reverse discrimination.

How is it reverse discrimination? It’s still plain old discrimination. I’m starting to smell biased research here. Or at least the researchers have a bias.

Clearly discrimination is only against women, thus reverse discrimination is against men. /s

any bias that doesn’t confirm my bias is reverse bias

see also: reverse racism

Thanks for pointing it out. There is clearly room for a lot of error.

Has anyone found the specific acceptance rates with gender known compared to gender unknown?

On researchgate I only found the abstract and a chart that doesn’t indicate exactly which numbers are shown.

edit:

What interests me is that not only women but also men had significantly lower acceptance rates once their gender was disclosed. So either we as humans have a really strange bias here, or non-binary coders are the only ones trusted.

edit²:

I’m not sure if I like the method of disclosing people’s gender here. Gendered profiles had their full name as their user name and/or a photograph as their profile picture that indicates a gender.

So it’s not only a gendered vs. non-gendered comparison but also an anonymous vs. identified individual comparison.

And apparently we trust people more if we know more about their skills (insiders rank way higher than outsiders) and less about the person behind them (pseudonym vs. name/photograph).

Thanks for grabbing the chart.

My Stats 101 alarm bells go off whenever I see a graph that does not start with 0 on the Y axis. It makes the differences look bigger than they are.

The ‘outsiders, gendered’ which is the headline stat, shows a 1% difference between women and men. When their gender is unknown there is a 3% difference in the other direction (I’m just eyeballing the graph here as they did not provide their underlying data, lol wtf ). So, overall, the sexism effect seems to be about 4%.

That’s a bit crap but does not blow my hair back. I was expecting more, considering what we know about gender pay gaps, etc.
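To put a number on the axis point, here is a quick sketch. It assumes the chart is read like a bar chart, with height measured from where the axis starts (60%, per the note on the chart elsewhere in this thread), and it uses the paper’s figures for women outsiders (71.8% with gender-neutral profiles, 62.5% when identifiable). The function name is made up for illustration.

```python
def visual_ratio(a, b, axis_start=0.0):
    """Ratio of the drawn heights of values a and b when the axis starts at axis_start."""
    return (a - axis_start) / (b - axis_start)

neutral, identifiable = 71.8, 62.5  # women outsiders, as quoted from the paper

true_ratio = visual_ratio(neutral, identifiable)          # axis starting at 0
drawn_ratio = visual_ratio(neutral, identifiable, 60.0)   # axis starting at 60%

print(f"ratio with axis at 0:   {true_ratio:.2f}")
print(f"ratio with axis at 60%: {drawn_ratio:.2f}")
```

So a gap of roughly 15% in relative terms would be drawn as if one value were several times the other. That does not make the gap fake, it just means the picture overstates it.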

…or the research is flawed. Gender identity was gained from social media accounts. So maybe it’s a general bias against social media users (half joking).

I think (unless I misunderstood the paper), they only included people who had a Google+ profile with a gender specified in the study at all (this is from 2016 when Google were still trying to make Google+ a thing).

Perhaps people who keep their GitHub accounts anonymous simply tend to be more skilled. Older, more senior devs grew up in an era when social media was anonymous. Younger, more junior devs grew up when social media expects you to put your real name and face (Instagram, Snapchat, etc.).

More experienced devs are more likely to have their commits accepted than less experienced ones.
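A toy calculation of how that could play out, with no reviewer bias at all. Every number below is invented for illustration and none of it comes from the paper: two experience groups, each with an assumed share of contributors, an assumed chance of using an anonymous profile, and an assumed acceptance rate that depends only on experience.

```python
# All numbers are hypothetical; they only illustrate the confounding argument.
GROUPS = {
    # name: (share of contributors, P(anonymous profile), P(PR accepted))
    "senior": (0.4, 0.8, 0.75),
    "junior": (0.6, 0.3, 0.60),
}

def acceptance_by_visibility(groups):
    """Return (anonymous acceptance rate, identified acceptance rate)."""
    anon_prs = anon_ok = named_prs = named_ok = 0.0
    for share, p_anon, p_accept in groups.values():
        anon_prs += share * p_anon
        anon_ok += share * p_anon * p_accept
        named_prs += share * (1 - p_anon)
        named_ok += share * (1 - p_anon) * p_accept
    return anon_ok / anon_prs, named_ok / named_prs

anon_rate, named_rate = acceptance_by_visibility(GROUPS)
print(f"anonymous:  {anon_rate:.1%}")
print(f"identified: {named_rate:.1%}")
```

With these made-up inputs the anonymous group comes out several points ahead even though reviewers in the model never look at the profile. Whether anything like this holds for real GitHub users is exactly what the paper would need to control for.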

We ass-u-me too much based on people’s genders/photographs/ideas/etc., which taints our objectivity when assessing the quality of their code.

For a close example on Lemmy: people refusing to collaborate with “tankie” devs, with no further insight on whether the code is good or not.

There also used to be code licensed “not to be used for right wing purposes”, and similar.

That’s not an excellent example. If they refuse to collaborate with them, and also don’t make any claims about the quality of code, then the claim that their objectivity in reviewing code is tainted doesn’t hold.

Their objectivity is preempted by a subjective evaluation, just like it would be by someone’s appearance or any other perception other than the code itself.

Thank you. Unfortunately, your link doesn’t work either; it just leads to the Creative Commons information. Maybe it’s an issue with Firefox Mobile and adblockers. I’ll check it out later on a PC.

Looking at their comment history, they seem to always include that link to the CC license page in some attempt to prevent the comments from being used with AI.

I have no idea if that is actually a thing or just a fad, but that was the link.

Thanks for pointing that out.

Seems like a wild idea as… a) it poisons the data not only for AI but also for real users like me (I swear I’m not a bot :D). b) if this approach is used more widely, AIs will learn very fast to identify and ignore such nonsense links, probably much faster than real humans.

It sounds like a similar concept to captchas, which annoy real people yet fail to block out bots.

Yeah, that is my take as well. At first I thought it was completely useless, just like the old Facebook posts where users put legalese-sounding text on their profile to reclaim rights they had signed away when joining Facebook. But here it is possible that they are running their own instance, so there is no unified EULA, which gives the license thing a bit more credibility.

But as you say, bots will just ignore the links, and no single person would stand a chance against big AI with their legal teams, and even if they won the AI would still have been trained on their data, and they would get a pittance at most.

Page 15 of the pdf has this chart

(note the vertical axis starts at 60% acceptance rate)

60% acceptance rate baseline? Doubt!

Their link wasn’t to the paper but to the license to poison possible AIs training their models on our posts. Idk if that actually is of any use though

How does a photograph disclose someone’s gender?

The study differentiates between male and female only, and purely based on physical features such as eyebrows, mustache, etc.

I agree you can’t see one’s gender, but I would say for the study this can be ignored. If you want to measure a bias (‘women code better/worse than men’), it only matters what people believe they see. So if a person looks more male than female to a majority of GitHub users, they can be counted as male in the statistics. Even if they are of the opposite sex, are non-binary, or identify as something else, it shouldn’t change the observer’s bias.

Through gender stereotypes. The observer’s perception is all that counts in this case anyways.

This research isn’t peer reviewed so I wouldn’t take it at face value just yet.

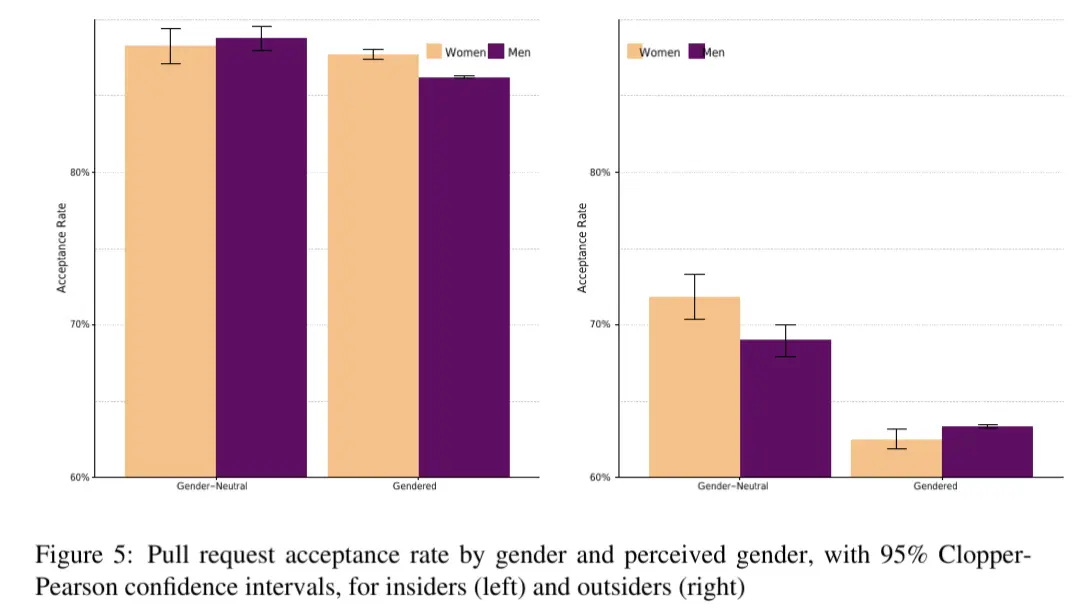

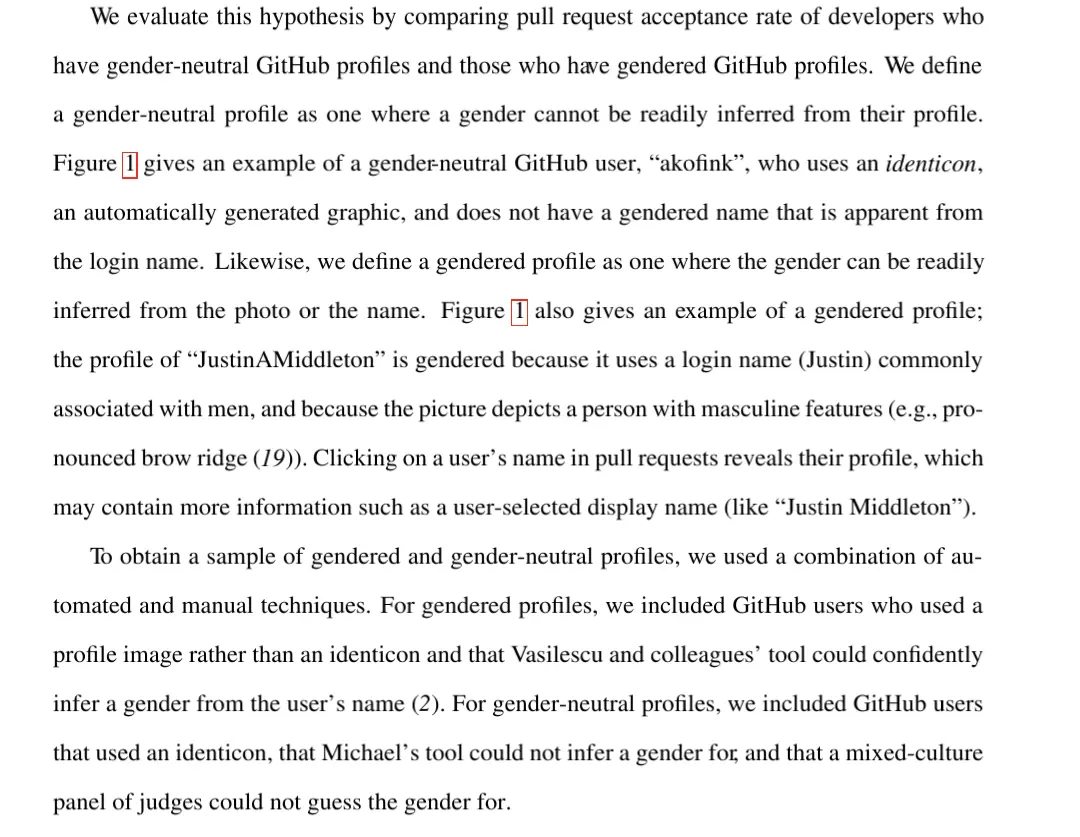

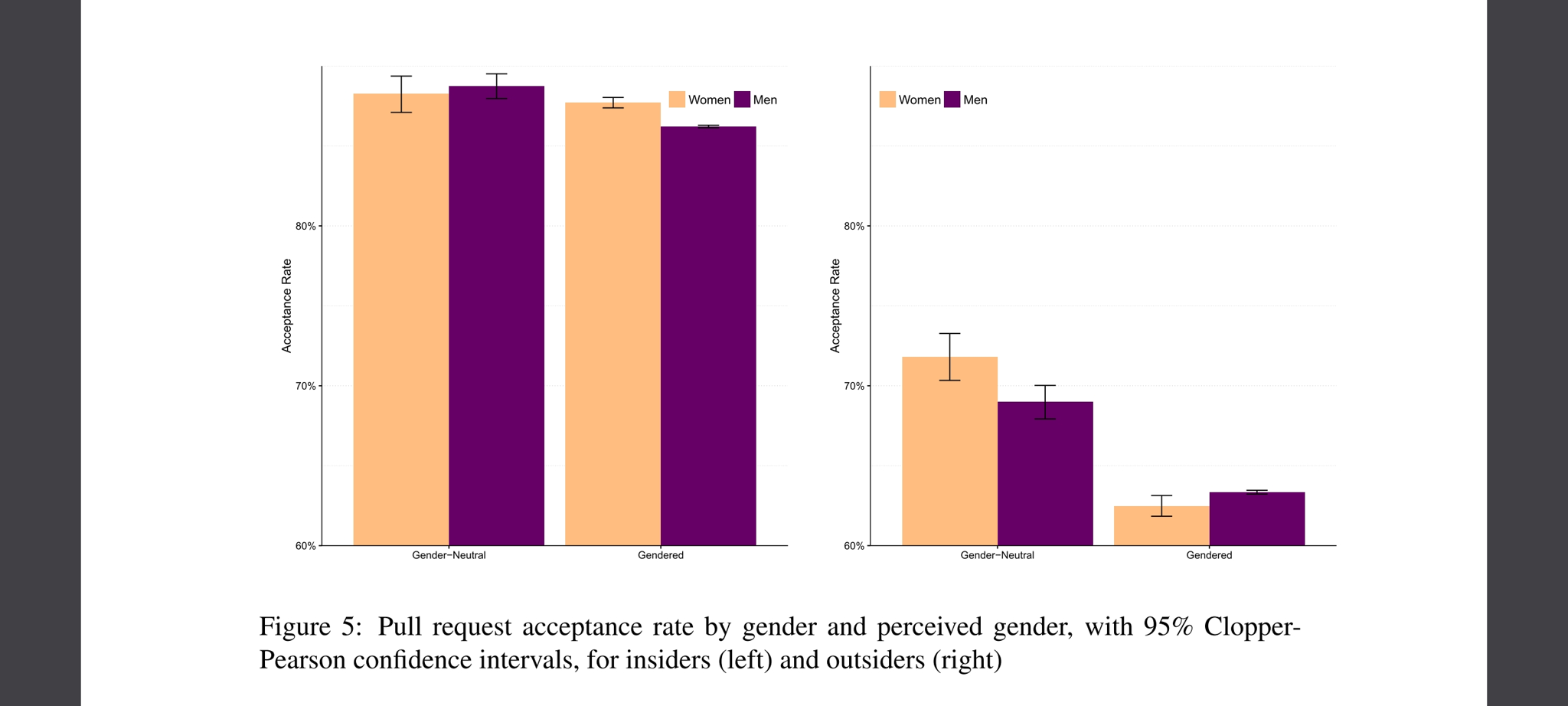

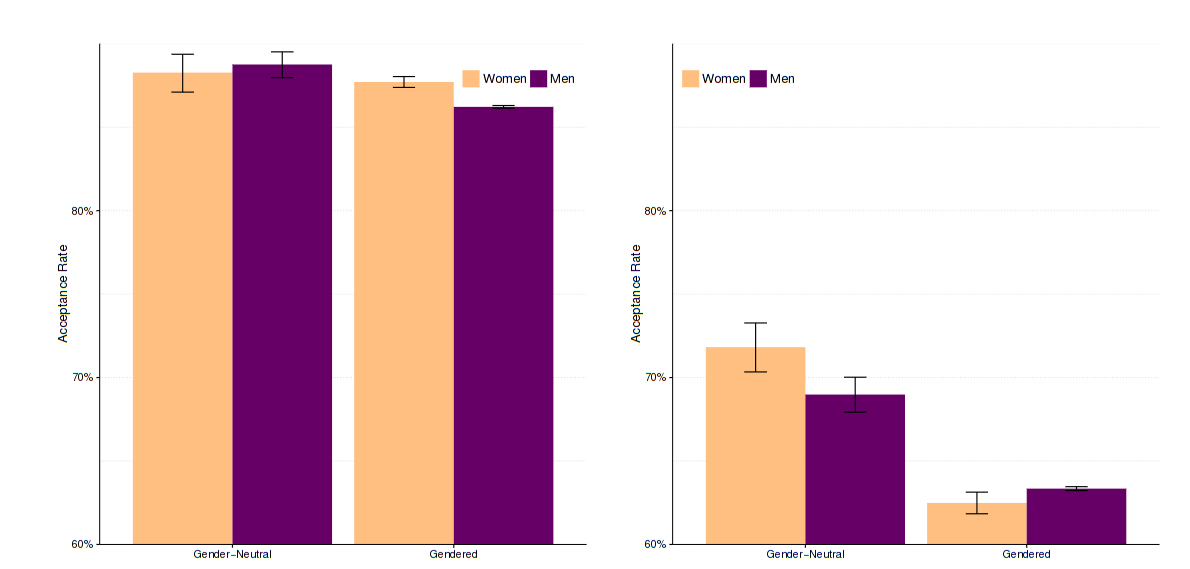

I think the most striking thing is that for outsiders (i.e. non repo members) the acceptance rates for gendered are lower by a large and significant amount compared to non-gendered, regardless of the gender on Google+.

The definition of gendered basically means including the name or photo. In other words, putting your name and/or photo as your GitHub username is significantly correlated with decreased chances of a PR being merged as an outsider.

I suspect this definition of gendered also correlates heavily with other forms of discrimination. For example, name or photo likely also reveals ethnicity or skin colour in many cases. So an alternative hypothesis is that there is racism at play in deciding which PRs people, on average, accept. This would be a significant confounding factor with gender if the gender split of Open Source contributors is different by skin colour or ethnicity (which is plausible if there are different gender roles in different nations, and obviously different percentages of skin colour / ethnicity in different nations).

To really prove this is a gender effect they could do an experiment: assign participants to submit PRs either as a gendered or non-gendered profile, and measure the results. If that is too hard, an alternative for future research might be to at least try harder to compensate for confounding effects.

You’re suggesting that devs trust anon devs more?

Devs focus more on the code of anon devs, instead of wasting time on their gender, race, or whatever.

From the post’s link:

We hypothesized that pull requests made by women are less likely to be accepted than those made by men. Prior work on gender bias in hiring – that women tend to have resumes less favorably evaluated than men (5) – suggests that this hypothesis may be true.

To evaluate this hypothesis, we looked at the pull status of every pull request submitted by women compared to those submitted by men. We then calculate the merge rate and corresponding confidence interval, using the Clopper-Pearson exact method (15), and find the following:

| | Open | Closed | Merged | Merge Rate | 95% Confidence Interval |
|---|---|---|---|---|---|
| Women | 8,216 | 21,890 | 111,011 | 78.6% | [78.45%, 78.87%] |
| Men | 150,248 | 591,785 | 2,181,517 | 74.6% | [74.56%, 74.67%] |

4 percentage point difference overall.

Pull requests can be made by anyone, including both insiders (explicitly authorized owners and collaborators) and outsiders (other GitHub users). If we exclude insiders from our analysis, the women’s acceptance rate (64.4%) continues to be significantly higher than men’s (62.7%) (χ²(df = 2, n = 2,473,190) = 492, p < .001)

Emphasis mine. That’s 1.7 percentage points.

The final paragraph also omits how the acceptance changes after gender is “revealed” (username, profile image). The graph doesn’t help either.
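For anyone who wants to check the quoted table, here is a rough sketch of the merge rate and a Clopper-Pearson interval using only the Python standard library. It assumes the merge rate is merged / (open + closed + merged), which is my reading of the table rather than something the paper spells out in the quote. The function names are my own, and the interval is found by bisection on the exact binomial tail rather than by whatever routine the authors used.

```python
import math

def upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p); assumes 0 < p <= k/n so terms shrink from k upward."""
    log_term = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                + k * math.log(p) + (n - k) * math.log1p(-p))
    term = math.exp(log_term)  # underflows to 0.0 when p is far below k/n
    total = term
    odds = p / (1 - p)
    i = k
    while i < n and term > total * 1e-17:
        term *= (n - i) / (i + 1) * odds  # pmf(i + 1) from pmf(i)
        total += term
        i += 1
    return total

def lower_bound(k, n, alpha):
    """Smallest p for which seeing k or more successes has probability alpha / 2."""
    if k == 0:
        return 0.0
    lo, hi = 0.0, k / n
    for _ in range(60):  # bisection; the tail probability increases with p
        mid = (lo + hi) / 2
        if upper_tail(k, n, mid) < alpha / 2:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def clopper_pearson(k, n, alpha=0.05):
    # The upper bound follows by symmetry: swap successes and failures.
    return lower_bound(k, n, alpha), 1 - lower_bound(n - k, n, alpha)

def merge_rate_with_ci(open_prs, closed, merged):
    n = open_prs + closed + merged
    return merged / n, clopper_pearson(merged, n)

for label, counts in {"Women": (8_216, 21_890, 111_011),
                      "Men": (150_248, 591_785, 2_181_517)}.items():
    rate, (low, high) = merge_rate_with_ci(*counts)
    print(f"{label}: {rate:.2%} [{low:.2%}, {high:.2%}]")
```

If the assumption about the denominator is right, the output should land close to the rates and intervals in the quoted table. The intervals are this narrow mainly because the sample is huge, which is also why a small difference comes out as statistically significant.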

For outsiders, we see evidence for gender bias: women’s acceptance rates are 71.8% when they use gender neutral profiles, but drop to 62.5% when their gender is identifiable. There is a similar drop for men, but the effect is not as strong. Women have a higher acceptance rate of pull requests overall (as we reported earlier), but when they’re outsiders and their gender is identifiable, they have a lower acceptance rate than men.

So women drop from 71.8% to 62.5% = 9.3 percentage points, and they say it’s more than men, but don’t reveal the difference. Only the graph has an indication (unless I’m missing a table), and it may be 5 (?) percentage points for men. That would be a gap of about 4 percentage points between the two genders.

Figure 5: Pull request acceptance rate by gender and perceived gender, with 95% Clopper-Pearson confidence intervals, for insiders (left) and outsiders (right)

The conclusion:

Our results suggest that although women on GitHub may be more competent overall, bias against them exists nonetheless.

That’s quite exaggerated for <=5 percentage points. Especially for the number of people involved.

Out of 4,037,953 GitHub user profiles with email addresses, we were able to identify 1,426,121 (35.3%) of them as men or women through their public Google+ profiles.

Maybe I missed it, but how many of those were women and how many made PRs?

in a 2013 survey of the more than 2000 open source developers who indicated a gender, only 11.2% were women

Let’s compare the PR rate per gender:

Let’s say the percentage of women has not increased since 2013, which I’d find difficult to believe. That’s roughly 1,269,247 men and 156,873 women (rounding the share to 11%). Men made 150,248 + 591,785 + 2,181,517 = 2,923,550 PRs. Women made 8,216 + 21,890 + 111,011 = 141,117 PRs. That’s ~2.3 PRs per man and ~0.9 PRs per woman. If the percentage changed and more women became contributors, that would decrease the PRs per woman.
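The back-of-the-envelope numbers above as a small script, so the assumed share of women can be varied. It carries the same big assumptions as the comment: the 2013 survey share applies to the 1,426,121 identified profiles, and every identified profile counts as a potential PR author. It uses 11.2% as quoted, so the head counts differ slightly from the ones in the comment, but the per-person figures round the same. The function name is my own.

```python
IDENTIFIED = 1_426_121                      # profiles identified as men or women
PRS_MEN = 150_248 + 591_785 + 2_181_517     # open + closed + merged
PRS_WOMEN = 8_216 + 21_890 + 111_011

def prs_per_person(share_women):
    """Return (PRs per man, PRs per woman) for an assumed share of women."""
    women = round(IDENTIFIED * share_women)
    men = IDENTIFIED - women
    return PRS_MEN / men, PRS_WOMEN / women

for share in (0.112, 0.15, 0.20):
    per_man, per_woman = prs_per_person(share)
    print(f"women {share:.1%}: {per_man:.2f} PRs per man, {per_woman:.2f} PRs per woman")
```

Raising the assumed share of women lowers the PRs-per-woman figure and raises the per-man one, so the gap in activity only gets wider under that scenario.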

That leads me to ask:

- Are women more hesitant to contribute PRs that might not be merged? If so, it might contribute to why their PRs are merged more often.

- Are the women with accounts on GitHub more likely to be people who have some kind of education in the IT field? If there are fewer hobbyist women (percentage-wise) on GitHub, and more hobbyist men who just chuck their stuff online and then decide to contribute to a project, it might contribute to PR acceptance (you’re comparing pros to amateurs).

- What does a similar acceptance rate for more than double the number of PRs per person for men actually say? I don’t know, but it might be pertinent.

I very much encourage humans to contribute to open source. So, while this paper says something about the current state of things, it doesn’t seem like it’s saying much. The differences in pull request acceptance are not very significant (<5 percentage points) to me.

Out of 4,037,953 GitHub user profiles with email addresses, we were able to identify 1,426,121 (35.3%) of them as men or women through their public Google+ profiles.

Could be a confounding variable in that the type of people who reveal their gender publicly might differ from those who don’t in some way that is also related to their contribution quality

That might also be the case, but that then raises the question of the quality of PRs in order to judge the contribution quality of “anonymous” contributors.

I wonder if experiences from 12 years ago and numbers from 8 years ago still hold true as of today.

It’s worth trying.

Obligatory mentions of the replication crisis and the Hierarchy of evidence.

Bias against women exists (I remember hearing various “insights” about women IRL from men), but I think something else is going on. Reportedly even people who report they are men get “discriminated” against. Maybe it’s just that extroverted people show their gender, and that slightly correlates with lower quality?

Also, I remember reading a woman saying she does not show her gender online to avoid harassment. Maybe that also correlates (younger women not yet having the insight that hiding your gender could be a net win for the preferences they have).

Here’s the final peer-reviewed version: https://peerj.com/articles/cs-111/.

Really great comments at https://peerj.com/articles/cs-111/reviews/. The reviewers are very nice about it but do point out some big issues towards the end.

Why would someone know your gender? Why out your identity?

I mean, it’s not exactly uncommon nowadays. There are tons of commenters on this post who have their pronouns displayed next to their username.

I figured it would look more professional, and it would also let me separate the contributions I made with my more anonymous GitHub out—not too sure how closely they investigate your previous contributions and how good your code was.

I am guessing this was not a good choice, and I should have just continued using my more anonymous GitHub, or made the account as JSmith instead of JaneSmith.