Damn, I was just looking into and learning about the main compression algorithms (gzip, bzip2, xz) the other week. I guess this is why you stick to the ol’ reliable gzip even if it’s not the most space efficient.

Genuinely crazy to read that a library this big would be intentionally sabotaged. Curious if xz can ever win back trust…

Can anyone help me understand xz vs Zstd?

Technically, XZ is just a container that allows for different compression methods inside, much like the Matroska MKV video container. In practice, XZ is modified LZMA (LZMA2).
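If it helps to see the “container” part, here is a minimal sketch using Python’s standard `lzma` module. The sample payload and the particular filter chain (delta in front of LZMA2) are just assumptions for illustration, not anything xz requires:

```python
import lzma

# Hypothetical sample payload, chosen only for illustration.
data = bytes(range(256)) * 64

# Default .xz stream: the container plus a single LZMA2 filter.
plain = lzma.compress(data, format=lzma.FORMAT_XZ)

# Same container, but with a delta filter chained in front of LZMA2.
chain = [
    {"id": lzma.FILTER_DELTA, "dist": 1},
    {"id": lzma.FILTER_LZMA2, "preset": 6},
]
chained = lzma.compress(data, format=lzma.FORMAT_XZ, filters=chain)

# Every .xz stream starts with the same six magic bytes.
XZ_MAGIC = b"\xfd7zXZ\x00"
print(plain[:6] == XZ_MAGIC, chained[:6] == XZ_MAGIC)

# The filter chain is recorded in the stream, so decompression needs no hints.
print(lzma.decompress(plain) == data, lzma.decompress(chained) == data)
```

Both streams share the same header and decompress the same way; only the filters recorded inside differ.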

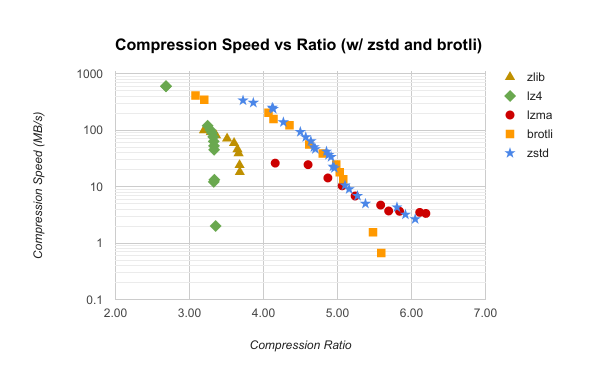

There is no perfect algorithm for every situation, so I’ll attempt to summarize.

- Gzip/zlib is best when speed and support are the primary concerns

- Bzip2 was largely phased out and replaced by XZ (LZMA) a decade ago

- XZ (LZMA) will likely give you the best compression, with high CPU and RAM usage

- Zstd is… really good, and the numerous compression levels offer great flexibility

The chart above, which was sourced from this blog post, offers a nice visual comparison.
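To get a rough feel for these trade-offs on your own data, something like the following sketch works with only the Python standard library. The sample input and the chosen levels are assumptions, and Zstd is left out because it is not in the standard library on most Python versions:

```python
import bz2
import lzma
import time
import zlib

# Hypothetical sample input: very repetitive text, which flatters every codec.
data = b"The quick brown fox jumps over the lazy dog. " * 20000

# Each entry maps a label to a (compress, decompress) pair.
codecs = {
    "gzip/zlib": (lambda d: zlib.compress(d, 6), zlib.decompress),
    "bzip2": (lambda d: bz2.compress(d, 9), bz2.decompress),
    "xz (LZMA2)": (lambda d: lzma.compress(d, preset=6), lzma.decompress),
}

results = {}
for name, (compress, decompress) in codecs.items():
    start = time.perf_counter()
    packed = compress(data)
    elapsed = time.perf_counter() - start
    assert decompress(packed) == data  # round-trip sanity check
    results[name] = (len(packed), elapsed)
    print(f"{name:12s} {len(packed):8d} bytes  {elapsed * 1000:8.1f} ms")
```

Numbers from a toy input like this say little about real workloads, so swap in a file you actually care about before drawing conclusions.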

Thanks for this! Good to know that Zstd seems to be a pretty much drop in replacement.

It looks like someone made a Rust implementation, which is a lot slower and only does decompression, but it’s at least a rival implementation should zstd get some kind of vulnerability.

Yep that would be me :)

There is also an independent implementation for golang, which even does compression iirc (there is also a golang implementation by me but don’t use that. It’s way way slower than the other one and unmaintained since I switched to rust development)

Awesome! It’s impressive that it’s decently close in performance with no unsafe code. Thanks for your hard work!

And that Go implementation is pretty fast too! That’s quite impressive.

Sadly it does have one place with unsafe code. I needed a ringbuffer with an efficient “extend from within” implementation. I always wanted to contribute that to the standard library to actually get to no unsafe.

Ah, I saw a PR from like 3 years ago that removed it, so it looks like you added it back in for performance.

Have you tried contributing it upstream? I’m not a “no unsafe” zealot, but in light of the xz issue, it would be nice.

It’s too bad, xz is really great for archival.

(/s but I guess kinda not) state-actor weapon compression library vs Meta/FB compression library. Zstd is newer, good compression and decompression, but new also means not as widely used.

On the other hand, whether you trust a government more or less than Facebook/Meta is on your conscience.

Certainly not going back to that /s “state-actor weapon compression library” until it’s picked up by Red Hat or the like…

I guess gzip is good enough for me and my little home lab

This is the best summary I could come up with:

Today’s disclosure of XZ upstream release packages containing malicious code to compromise remote SSH access has certainly been an Easter weekend surprise…

The situation only looks bleaker over time given how the upstream project was compromised, and the latest twist is GitHub disabling the XZ repository in its entirety.

In any event, it is a notable step given today’s flurry of news, although the disabled state makes it more difficult to track down other potentially problematic changes by the bad actor(s), with access to merge request data and other pertinent information blocked.

With upstream XZ not yet having issued a corrected release, and contributions by one of its core contributors – and release creators – over the past two years called into question, taking the hammer to the XZ repository’s public access is not without cause.

Some, such as in Fedora discussions, have raised the prospect of forking XZ, although there is still the matter of auditing past commits.

Others like Debian have considered pulling back to the latest release prior to the bad actor and then just patching vetted security fixes on top.

The original article contains 282 words, the summary contains 189 words. Saved 33%. I’m a bot and I’m open source!

This kinda makes sense, corporately. It’s technically hosting insecure malicious code… maybe they don’t want the liability of redistributing that, even in the git history.

It also means that Microsoft has unprecedented control over the life of any open source project still hosted on GitHub.

If you don’t host your own git server, this is always true.

“Unprecedented” except for every single repo that’s ever been hosted on a platform the authors didn’t own. There are far better battles to pick.

Whoever is paying for the server will have control, Microsoft or not.