This requires local access and, at present, an hour or two of uninterrupted processing time on the same CPU as the encryption algorithm.

So if you’re like me, using an M-chip based device, you don’t currently have to worry about this, and may never have to.

On the other hand, the thing you have to worry about has not been patched out of nearly any algorithm:

The second comment on the page sums up what I was going to point out:

I’d be careful making assumptions like this; the same was true of exploits like Spectre until people managed to get it running efficiently in JavaScript in a browser (which did not take very long after the Spectre paper was released). Don’t assume that because the initial PoC is time consuming and requires a bunch of access that it won’t be refined into something much less demanding in short order.

Let’s not panic, but let’s not get complacent, either.

That’s the sentiment I was going for.

There’s reason to care about this but it’s not presently a big deal.

I mean, unpatchable vulnerability. Complacent, uncomplacent, I’m not real sure they look different.

Can’t fix the vulnerability, but can mitigate by preventing other code from exploiting the vulnerability in a useful way.

Sure. Unless law enforcement takes it, in which case they have all the time in the world.

Yup, but they’re probably as likely to beat you up to get your passwords.

It still requires user level access, which means they have to bypass my login password first, which would give them most of that anyways.

Am I missing something?

Shady crypto mining apps for starters

Yes, if you install malware it can be malware.

This specifically was in response to a claim about the police taking your laptop despite the fact that it doesn’t appear to enhance their ability to do anything with possession of your laptop until they are able to bypass a password.

Ah yes, good old Rubber-hose cryptanalysis.

I want to say “passkeys” but if I’m honest, that too is susceptible to this attack.

So if someone somehow gets hold of the device then it is possible?

It depends, some M-devices are iOS and iPadOS devices, which would have this hardware issue but don’t have actual background processing, so I don’t believe it’s possible to exploit it the way described.

On Mac, if they have access to your device to be able to set this up they likely have other, easier to manage, ways to get what they want than going through this exploit.

But if they had your device and uninterrupted access for two hours then yes.

Someone who understands it all more than I do could chime in, but that’s my understanding based on a couple of articles and discussions elsewhere.

So it’s been a while since I had my OS and microcomputer architecture classes, but it really looks like GoFetch could be a real turd in the punch bowl. It appears like it could be on par with the intel vulns of recent years.

which would have this hardware issue but don’t have actual background processing

So I’ve read the same about iOS only allowing one user-space app in the foreground at a time, but… that still leaves the entirety of kernel-space processes allowed to run anytime they want. So it’s not hard to imagine that a compromised app could be running in the foreground, all the while running GoFetch trying to mine, while the OS might be shuffling crypto keys in the background on the same processor cluster.

The other thing I’d like to address, is you’re assuming this code would necessarily require physical access to compromise a machine. That is certainly one vector, but I’d posit there’s other simpler ways to do the same. The two that come to mind really quick, are (1) a compromised app via official channels like the app store, or even more scary, (2) malicious javascript hidden on compromised websites. The white paper indicates this code doesn’t need root, it only needs to be executed on the same cluster where the crypto keys keep passing through by chance; so either of these vectors seem like very real possibilities to me.

Edit to add:

I seem to recall reading a paper saying that stock installations of the TikTok app were actually polyglot, in that the app would download a binary after installation, such that what’s executed on an end user’s machine is not what went through the app store scanners. I had read of other apps using a similar technique for mini-upgrades, which is a useful way to avoid going through app store approval every time you need to roll out a hotfix or the latest minor feature.

If these mechanisms haven’t already been smacked down by apple/google, or worse, aren’t detectable by apple/google, this could be a seriously valuable tool for state level actors able to pull off the feat of hiding it in plain sight. I wonder if this might be part of what congress was briefed about recently, and why it was a near unanimous vote to wipe out tiktok. “Hey congress people, all your iphones are about to be compromised… your tinder/grindr/onlyfans kinks are about to become blackmail fodder.”

Doesn’t it require a separate process to be using the cryptographic algorithm in the first place in order to fill the cache in question?

If it’s done in-process of a malicious app that you’re running, why wouldn’t the app just steal your password and avoid all of this in the first place?

An efficient and fast version of this in Javascript would be worrisome. But as-is it’s not clear if this can be optimized to go faster than 1-2 uninterrupted hours of processing, so hopefully that doesn’t end up being the case.

Doesn’t it require a separate process to be using the cryptographic algorithm in the first place in order to fill the cache in question?

Yes, that’s my understanding. I haven’t looked at the code, but their high level explanation sounds like their app is making calls to an API which could result in the under-the-hood crypto “service” pulling the keys into the cache, and there’s an element of luck to whether they snag portions of the keys at that exact moment. So it seems like the crafted app doesn’t have the ability to manipulate the crypto service directly, which makes sense if this is only a user-land app without root privileges.

why wouldn’t the app just steal your password and avoid all of this in the first place?

I believe it would be due to the app not having root privileges, and so being constrained to going through layers of abstraction to get its crypto needs met. I do not know the exact software architecture of iOS/macOS, but I guarantee there’s a notion of needing to call an API for these types of things. For instance, if your app needs to push/pull an object it owns in/out of iCloud, you’d call the API with a number of arguments to do so. You would not have the ability to access keys directly and perform the encrypt/decrypt all by yourself. Likewise with any passwords, you would likely instead make an API call and the backing code/service would have that isolated/controlled access.

Fetching remote code isn’t allowed on the play store at least, though I’m not sure how well they’re enforcing that.

That’s the reason termux isn’t updated in the play store anymore iirc, it has its own package manager that downloads and runs code.

Yeah I don’t think this is a big-ish problem currently. But by having this vulnerability to point to, other CPU vendors have a good reason not to include this feature in their own chips.

What I’m worried about is Apple overreacting and bottlenecking my M3 pro because “security”. We already saw how fixes for these types of vulnerabilities on Intel and AMD silicon affected performance; no thank you.

First, we need to see how the exploit evolves.

Second, even if it is not exploitable in a malicious way, this could be great for jailbreaking more modern Apple devices and circumventing Apple’s bullshit.

Oops

Whoopsie

Apple’s gonna need you to get ALL the way off their back about this.

Oh sorry, lemme get off that thing!

Apple is not a secure ecosystem.

No system is free from vulnerabilities.

No system is perfect, sure, but rolling their own silicon was sorta asking for this problem.

As opposed to what? Samsung, Intel, AMD and NVIDIA and others are also “rolling their own silicon”. If a vulnerability like that was found in intel it would be much more problematic.

This particular class of vulnerabilities, where modern processors try to predict what operations might come next and perform them before they’re actually needed, has been found in basically all modern CPUs/GPUs. Spectre/Meltdown, Downfall, Retbleed, etc., are all a class of hardware vulnerabilities that can leak cryptographic secrets. Patching them generally slows down performance considerably, because the actual hardware vulnerability can’t be fixed directly.

It’s not even the first one for the Apple M-series chips. PACMAN was a vulnerability in M1 chips.

Researchers will almost certainly continue to find these, in all major vendors’ CPUs.

How much slower is a CPU without this functionality built in?

The patch for meltdown results in a performance hit of between 2% and 20%. It’s hard to pin down an exact number because it varies both by CPU and use case.

It basically varies from chip to chip, and program to program.

Speculative execution is when a program hits some kind of branch (like an if-then statement) and the CPU just goes ahead and calculates as if it’s true, and progresses down that line until it learns “oh wait it was false, just scrub all that work I did so far down this branch.” So it really depends on what that specific chip was doing in that moment, for that specific program.

It’s a very real performance boost for normal operations, but for cryptographic operations you want every function to perform in exactly the same amount of time, so that something outside that program can’t see how long it took and infer secret information.

These timing/side channel attacks generally work like this: imagine you have a program that tests if variable X is a prime number, by testing if every number smaller than X can divide evenly, from 2 on to X. Well, the bigger X is, the longer that particular function will take. So if the function takes a really long time, you’ve got a pretty good idea of what X is. So if you have a separate program that isn’t allowed to read the value of X, but can watch another program operate on X, you might be able to learn bits of information about X.
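To make that prime-testing example concrete, here is a minimal Python sketch. The name `trial_division_steps` and the step counter are made up for illustration; the counter stands in for the running time that an outside observer could measure without ever reading X.

```python
def trial_division_steps(x):
    """Naive primality test by trial division.

    Returns (is_prime, steps), where steps counts the divisions performed.
    The step count is a stand-in for observable running time.
    """
    steps = 0
    for d in range(2, x):
        steps += 1
        if x % d == 0:
            # Early exit: the work done depends on the secret value x.
            return False, steps
    return x >= 2, steps


if __name__ == "__main__":
    # An observer who only sees the step count still learns something about x.
    for x in (7, 101, 1009):
        print(x, trial_division_steps(x))
```

For a prime X the loop runs all the way to X, so the step count grows with X. For a composite X it stops at the smallest divisor, which leaks a different piece of information about X.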

Patches for these vulnerabilities change the software so that those programs/functions run in fixed time, but then you lose all the efficiency gains of being able to finish faster, because you slow the program down to the weakest link, so to speak.
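A rough sketch of what "fixed time" means in software, using byte-string comparison as the example. `leaky_equal` and `fixed_equal` are hypothetical names, and the counter of compared bytes stands in for elapsed time. Pure Python gives no real timing guarantees, so treat this as an illustration of the idea only; in real Python code the standard library's `hmac.compare_digest` is the function meant for this job.

```python
import hmac


def leaky_equal(a, b):
    """Early-exit comparison: work done depends on where the first mismatch is."""
    if len(a) != len(b):
        return False, 0
    compared = 0
    for x, y in zip(a, b):
        compared += 1
        if x != y:
            return False, compared  # stops early, leaking the mismatch position
    return True, compared


def fixed_equal(a, b):
    """Fixed-work comparison: always touches every byte, mismatch or not."""
    if len(a) != len(b):
        return False, 0
    compared = 0
    diff = 0
    for x, y in zip(a, b):
        compared += 1
        diff |= x ^ y  # accumulate differences without branching on them
    return diff == 0, compared


if __name__ == "__main__":
    secret = b"secret"
    print(leaky_equal(secret, b"sXcret"))
    print(fixed_equal(secret, b"sXcret"))
    print(hmac.compare_digest(secret, b"secret"))
```

The fixed-work version always pays for the full length, which is the efficiency loss described above: the fast path is given up so that the amount of work no longer depends on the secret.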

Also the article states it is found in Intel’s chips too. So not really any better if they had stayed on that pathway either.

I’ve just finished reading the article, it does not say this. It says Intel also has a DMP but that only Apple’s version has the vulnerability.

Just reread it. You are indeed correct.

As I understand it, all DMPs of this type are subject to the vulnerability and so intel (and the newest m3) selectively disable it during cryptographic operations

Nope, a DMP can treat a value as a pointer but doesn’t need to. Intel’s doesn’t, and because of that it’s not vulnerable. But just in case, they also provide a way to disable the DMP.

This issue is extremely similar to problems found with both Intel and AMD processors too (see: Meltdown, Spectre).

“Govt-mandated backdoor in Apple chips revealed”

There, fixed that for you.

Wow, what a dishearteningly predictable attack.

I have studied computer architecture and hardware security at the graduate level—though I am far from an expert. That said, any student in the classroom could have laid out the theoretical weaknesses in a “data memory-dependent prefetcher”.

My gut says (based on my own experience having a conversation like this) the engineers knew there was an “information leak” but management did not take it seriously. It’s hard to convince someone without a cryptographic background why you need to {redesign/add a workaround/use a lower performance design} because of “leaks”. If you can’t demonstrate an attack they will assume the issue isn’t exploitable.

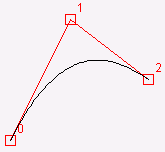

So the attack is (very basically, if I understand correctly)

Setup:

- I control at least one process on the machine I am targeting another process on

- I can send data to the target process and the process will decrypt that

Attack:

- I send data that in some intermediate state of decryption will look like a pointer

- This “pointer” contains some information about the secret key I am trying to steal

- The prefetcher does its thing, loading the data “pointed to” into the cache

- I can observe via a cache side channel what the prefetcher did, giving me this “pointer” containing information about the secret key

- Repeat until I have gathered enough information about the secret key

Is this somewhat correct? Those speculative execution vulnerabilities always make my brain hurt a little
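The steps above can be sketched as a toy simulation in Python. Nothing here touches real hardware or reflects the actual GoFetch code: the "cache" is a plain set, `ToyPrefetcher`, `victim_decrypt_step`, the address range, and the use of XOR as the "intermediate decryption state" are all assumptions invented for illustration. It only shows the shape of the idea, where attacker-chosen input mixed with a secret sometimes looks like a pointer and the prefetcher's reaction is observable.

```python
BUFFER_BASE = 0x1000  # hypothetical address range the attacker can probe
BUFFER_SIZE = 0x100


class ToyPrefetcher:
    """Pretend data-dependent prefetcher: 'fetches' any value that looks like a pointer."""

    def __init__(self):
        self.cache = set()  # addresses currently 'cached'

    def observe(self, value):
        if BUFFER_BASE <= value < BUFFER_BASE + BUFFER_SIZE:
            self.cache.add(value)


def victim_decrypt_step(secret_byte, attacker_input, prefetcher):
    """Victim mixes attacker data with its secret; the prefetcher sees the result."""
    intermediate = attacker_input ^ secret_byte  # stand-in for real crypto state
    prefetcher.observe(intermediate)


def recover_secret_byte(secret_byte):
    """Attacker loop: craft inputs, then check the 'cache' for one chosen address."""
    target = BUFFER_BASE + 0x42  # the address the attacker will probe
    for guess in range(256):
        prefetcher = ToyPrefetcher()  # models starting from a flushed cache
        victim_decrypt_step(secret_byte, target ^ guess, prefetcher)
        if target in prefetcher.cache:  # models the cache side-channel probe
            return guess
    return None


if __name__ == "__main__":
    print(hex(recover_secret_byte(0xA7)))
```

The attacker never reads `secret_byte` directly; it only learns which crafted input made the intermediate value land on the probed address, and repeats until enough of the secret is known.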

The more probable answer is that the NSA asked for the backdoor to be left in. They do all the time, it’s public knowledge at this point. AMD and Intel chips have the requisite backdoors by design, and so does Apple. The Chinese and Russian designed chips using the same architecture models, do not. Hmmmm… They have other backdoors of course.

It’s all about security theatre for the public but decrypted data for large organizational consumption.

I don’t believe that explanation is more probable. If the NSA had the power to compel Apple to place a backdoor in their chip, it would probably be a proper backdoor. It wouldn’t be a side channel in the cache that is exploitable only in specific conditions.

The exploit page mentions that the Intel DMP is robust because it is more selective. So this is likely just a simple design error of making the system a little too trigger-happy.

They do have the power and they do compel US companies to do exactly this. When discovered publicly they usually limit it to the first level of the “vulnerability” until more is discovered later.

It is not conjecture, there are leaked documents that prove it. And anyone who works in semiconductor design (cough cough) is very much aware.

If you can’t demonstrate an attack they will assume the issue isn’t exploitable.

Absolutely. Theory doesn’t always equal reality. The security guys submitting CVEs to pad their resumes should absolutely be required to submit a working exploit. If they can’t, then they’re just making needless noise.

There are definitely bullshit cves out there but I don’t think that’s a good general rule. Especially in this context where it’s literally unpatchable at the root of the problem.

newly discovered side channel

NSA: “haha yeah… new…”

This is the best summary I could come up with:

A newly discovered vulnerability baked into Apple’s M-series of chips allows attackers to extract secret keys from Macs when they perform widely used cryptographic operations, academic researchers have revealed in a paper published Thursday.

The flaw—a side channel allowing end-to-end key extractions when Apple chips run implementations of widely used cryptographic protocols—can’t be patched directly because it stems from the microarchitectural design of the silicon itself.

The vulnerability can be exploited when the targeted cryptographic operation and the malicious application with normal user system privileges run on the same CPU cluster.

Security experts have long known that classical prefetchers open a side channel that malicious processes can probe to obtain secret key material from cryptographic operations.

This vulnerability is the result of the prefetchers making predictions based on previous access patterns, which can create changes in state that attackers can exploit to leak information.

The breakthrough of the new research is that it exposes a previously overlooked behavior of DMPs in Apple silicon: Sometimes they confuse memory content, such as key material, with the pointer value that is used to load other data.

The original article contains 744 words, the summary contains 183 words. Saved 75%. I’m a bot and I’m open source!

I get to this part and feel like I’m being trolled.

“meaning the reading of data and leaking it through a side channel—is a flagrant violation of the constant-time paradigm.”

Any chance of recall?

Nope since it’s an intended feature.

So does this mean it’s being actively exploited by people? How screwed is the average person over this?

Considering how bad apple programmers are I am not surprised. I created an apple account just so I can get apple tv. Sadly I made the account on tv.apple.com and didn’t make a 2fa so now I can not log into appleid.apple.com or iCloud.com or into my Apple 4k+

This happened this week…

I think it’s not the devs, but the managers who push the devs. It usually is. In Apple’s case the managers desperately want devs to keep everything in such a way that it only works with Apple, and can’t work with anything else or we might lose a customer! It probably didn’t cause this particular problem, but you get the idea.

Cough NSA Cough cough

I have a dormant Apple account because I had an iPhone before. The annoying thing about that account in particular is that I need an Apple device to manage it, so without one I can only hope to remember the password correctly. But setting this aside, that iPhone was a neat little phone and I do miss it at times.

I somehow doubt they have the same people building online apps and designing the processors.

A revolution in composition. First titanium (making phones somehow less durable), and now they can’t even keep their own chips secure because of the composition of the chip lol

That is some grade A armchair micro architecture design you got going there.

Apple completely switched their lineup to the obvious next big thing in processing in less than two years, improving efficiency and performance by leaps and bounds. It has had astounding improvements in terms of generating heat and preserving battery life in traditional computing and they did it without outright breaking backward compatibility.

But oh, it turns out three years later someone found an exploit. Guess the whole thing is shit then.

edit: oh yeah and traditional x86 manufacturers have had the same type of exploit while still running hot

edit2: This is not to say Apple is our holy Jesus lord and saviour, they’re plenty full of shit, but the switch to ARM isn’t one of those things

I don’t use any Apple products and am not planning to change this in the near future, but man, the stuff I’ve read and seen about the M chips left me absolutely amazed.

This is Lemmy. What were you expecting?

Well, this is Lemmy. What were YOU expecting?

Questioning my expectations for pointing out bullshit is… bullshit.