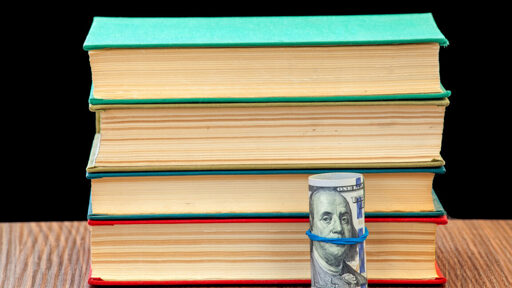

Authors revealed today that Anthropic agreed to pay $1.5 billion and destroy all copies of the books the AI company pirated to train its artificial intelligence models.

In a press release provided to Ars, the authors confirmed that the settlement is “believed to be the largest publicly reported recovery in the history of US copyright litigation.” The settlement covers 500,000 works that Anthropic pirated for AI training; if a court approves it, each author will receive $3,000 per work that Anthropic stole. “Depending on the number of claims submitted, the final figure per work could be higher,” the press release noted.

Not nearly enough. $3k for your work, and they can just do it again. If I were the judge, I’d be saying it’s an order of magnitude too low.

Eat fuckin shit you slop fuckers.

What happens to the information from these works that their LLMs have already ingested? Does Anthropic just get to keep whatever information they got? Can they even remove it if they were ordered to? I read the article but I’m not sure if this was answered in there.

I didn’t see anything about the models trained on the data. I see that they can litigate against past and future infringing output, but should the model itself not be an issue?

Guess they should just raise a Series Z round for $100 billion more.