- cross-posted to:

- technology@beehaw.org

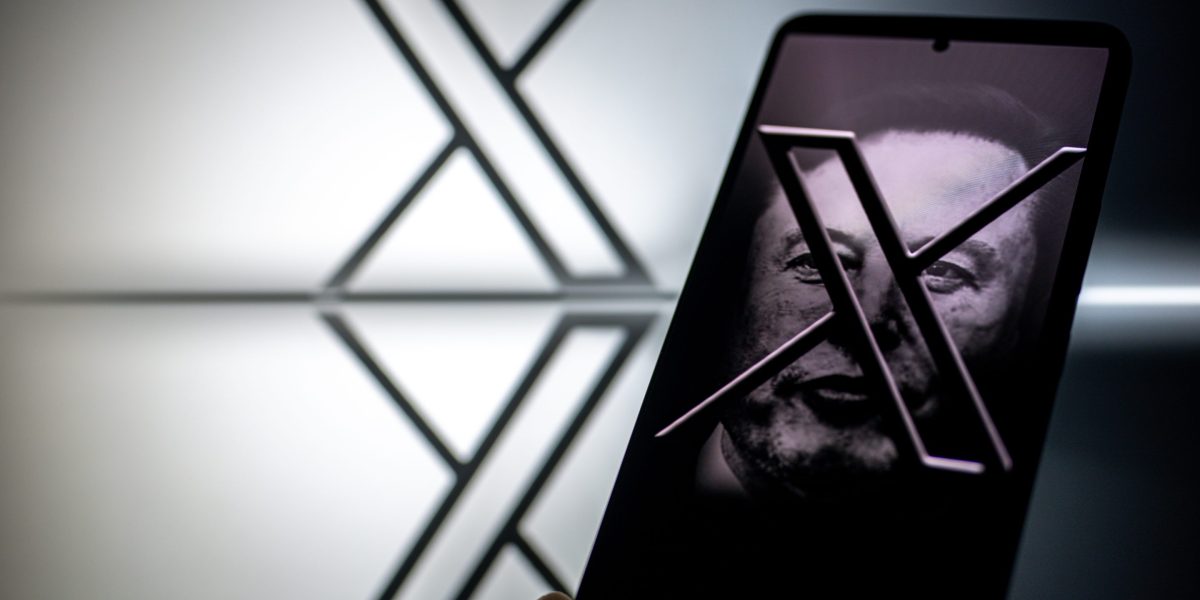

Inside the shifting plan at Elon Musk’s X to build a new team and police a platform ‘so toxic it’s almost unrecognizable’

X’s trust and safety center was planned for over a year and is significantly smaller than the initially envisioned 500-person team.

mirror: https://archive.vn/ghN0z

I don’t believe that for one second. I’d believe it if those numbers were reversed, but anyone who uses LLMs regularly knows how easy it is to circumvent them.

EDIT: Added the paragraph that comes right before the one I originally posted, since it specifies that their “AI system” is an LLM.

AI is whatever tech companies say it is. They aren’t saying it for people like you, who know it’s horseshit. They are saying it for investors, politicians, and uninformed folks. They are essentially saying that “AI” (cue jazz hands and glitter) can fix all of their problems, so don’t stop investing in us.

That’s not necessarily about LLMs. Recently I did an AI analysis predicting which customers will become VIPs based on their interactions, and coincidentally its accuracy was also 98%. Nowadays people equate AI with LLMs, but there’s much more to AI than LLMs.

I’m going off of the article, where they state that it’s an LLM. It’s the paragraph right before the one I originally posted:

EDIT: I will include it in the original comment for clarity, for those who haven’t read the article.